Testing Strategies

1. Importance of Testing Strategies

- Assesses the correctness, completeness, and quality of software

- Identifies errors, gaps, and missing requirements

- Helps reduce and remove errors, improving software quality

- Verifies whether software meets its specified requirements

2. Testing Techniques and Approaches

- Testing begins at the component level and works outward toward integration of the entire system

- Different techniques suitable at different stages of testing

- Incremental testing approach for better effectiveness

- Involvement of both developers and independent test groups

3. Distinction between Testing and Debugging

- Testing focuses on finding errors and verifying requirements

- Debugging is the process of locating the cause of a detected error and fixing it

- Both activities are important but serve different purposes

Topic: Benefits of Software Testing

1. Cost-Effectiveness

- Identifying bugs early saves money in the long run

- Fixing issues in the early stages is less expensive

2. Security Enhancement

- Testing helps identify and remove risks and vulnerabilities

- Builds trust among users by helping deliver reliable and secure software

3. Product Quality Assurance

- Testing helps ensure the delivery of a high-quality product

- Identifies and fixes issues before software deployment

4. Customer Satisfaction

- Testing aims to meet customer expectations and requirements

- Helps deliver a reliable software experience with as few defects as possible

Topic: Verification and Validation (V&V)

1. Verification

- Ensures that software meets specified requirements and standards

- Focuses on checking whether the software is built correctly ("Are we building the product right?")

2. Validation

- Evaluates the software against user needs and intended use

- Focuses on checking whether the right product is being built ("Are we building the right product?")

3. Differences between Verification and Validation

- Verification checks adherence to specifications

- Validation checks if the software meets user expectations

Topic: Unit Testing

1. Purpose and Scope of Unit Testing

- Focuses on verifying individual program units/components

- Tests internal processing logic and data structures

2. Key Aspects of Unit Testing

- Testing module interfaces, local data structures, and control flow

- Exercising all independent paths and boundary conditions

- Testing error-handling paths and robustness
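
The key aspects above can be illustrated with a short unit test. This is a minimal sketch using Python's built-in `unittest` framework (an xUnit-family framework like JUnit and NUnit). The function `classify_score`, its valid range of 0 to 100, and its pass mark of 50 are hypothetical, chosen only so the unit has normal paths, boundaries, and an error-handling branch to exercise.

```python
import unittest


def classify_score(score: int) -> str:
    """Hypothetical unit under test: classify an exam score in the range 0..100."""
    if not 0 <= score <= 100:
        # Error-handling path: reject out-of-range input explicitly.
        raise ValueError(f"score out of range: {score}")
    return "pass" if score >= 50 else "fail"


class ClassifyScoreTest(unittest.TestCase):
    def test_normal_paths(self):
        # One representative value for each non-error outcome.
        self.assertEqual(classify_score(75), "pass")
        self.assertEqual(classify_score(20), "fail")

    def test_boundary_conditions(self):
        # Values exactly at each boundary and just below the pass mark.
        self.assertEqual(classify_score(0), "fail")
        self.assertEqual(classify_score(49), "fail")
        self.assertEqual(classify_score(50), "pass")
        self.assertEqual(classify_score(100), "pass")

    def test_error_handling_path(self):
        # Values just outside the valid range must raise, not return a result.
        for bad in (-1, 101):
            with self.assertRaises(ValueError):
                classify_score(bad)
```

Saved as a module, these tests can be run with `python -m unittest <module_name>`.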

3. Advantages of Unit Testing

- Helps developers understand the functionality and usage of each unit

- Supports refactoring and regression testing

- Allows independent testing of project parts

4. Unit Testing Tools

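- Examples: JUnit, NUnit, JMockit, EMMA, PHPUnit

- Unit testing frameworks for different languages (JUnit for Java, NUnit for .NET, PHPUnit for PHP), plus supporting tools such as JMockit for mocking and EMMA for code coverage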

Topic: Integration Testing

1. Purpose and Approach of Integration Testing

- Systematic technique for constructing the software architecture while conducting tests

- Uncovers errors associated with the interfaces between integrated components

2. Integration Testing Strategies:

1. Big Bang Approach:

- In the Big Bang approach, all components are integrated at once.

- Advantages:

- Quick integration of all components, allowing for faster testing.

- Suitable for smaller projects with fewer components.

- Disadvantages:

- Difficult to identify and isolate specific issues if failures occur.

- Dependencies between components may result in complex debugging.

2. Incremental Approach:

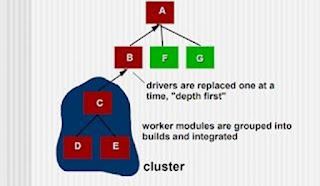

- The incremental approach involves integrating components in a step-by-step manner.

- Top-down Approach:

- Integration starts from the higher-level modules and progressively moves to lower-level modules.

- Advantages:

- Early identification of interface issues between major modules.

- Higher-level functionalities are tested first, aiding in the detection of critical issues.

- Disadvantages:

- Requires stubs or placeholder modules for lower-level components during early stages.

- Lower-level modules may remain untested until late in the testing process.

- Bottom-up Approach:

- Integration starts from the lower-level modules and gradually moves to higher-level modules.

- Advantages:

- Early detection of defects in individual components.

- Allows for incremental testing and identification of issues early on.

- Disadvantages:

- Higher-level functionalities may not be tested until late in the process.

- Requires drivers (test code that calls the lower-level modules) in place of the higher-level components during early stages.

- Sandwich Approach (Combination of Top-down and Bottom-up):

- Integration starts from both ends and meets in the middle.

- Advantages:

- Offers benefits of both top-down and bottom-up approaches.

- Allows for early detection of interface issues and individual component defects.

- Disadvantages:

- Complex planning and coordination are required.

- May require stubs and drivers for intermediate-level components.
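
To make the incremental orders concrete, here is a minimal sketch that derives a top-down (breadth-first) and a bottom-up integration order from a module call hierarchy, and lists which not-yet-integrated modules would need stubs at each top-down step. The module names and the `CALLS` hierarchy are hypothetical, and the sketch assumes the hierarchy contains no cycles.

```python
from collections import deque

# Hypothetical call hierarchy: each module maps to the modules it calls.
CALLS = {
    "main": ["billing", "reports"],
    "billing": ["tax", "db"],
    "reports": ["db"],
    "tax": [],
    "db": [],
}


def top_down_order(calls, root):
    """Breadth-first top-down order: start at the root, integrate one level at a time."""
    order, seen, queue = [], {root}, deque([root])
    while queue:
        module = queue.popleft()
        order.append(module)
        for callee in calls[module]:
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return order


def bottom_up_order(calls, root):
    """Post-order: every module is integrated after all the modules it calls."""
    order, seen = [], set()

    def visit(module):
        if module in seen:
            return
        seen.add(module)
        for callee in calls[module]:
            visit(callee)
        order.append(module)

    visit(root)
    return order


def stubs_needed(calls, order):
    """For each top-down step, list called modules not yet integrated (these need stubs)."""
    integrated, steps = set(), []
    for module in order:
        integrated.add(module)
        called = {c for m in integrated for c in calls[m]}
        steps.append((module, sorted(called - integrated)))
    return steps
```

For this hierarchy the top-down order is `main, billing, reports, tax, db` and the bottom-up order is `tax, db, billing, reports, main`. A depth-first top-down order would be an equally valid choice; breadth-first is used here only because it is short to write.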

3. Testing Clusters of Related Modules:

- Grouping related modules together for integration testing.

- Advantages:

- Focuses on testing interconnected modules that work together to deliver specific functionalities.

- Allows for targeted testing of critical functionality clusters.

- Disadvantages:

- May overlook integration issues between modules outside the tested clusters.

- Interactions between different clusters may not be adequately tested.

3. Advantages and Disadvantages of Integration Testing Strategies

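- Big Bang: simple and needs no stubs or drivers, which can save resources, but failures are hard to localize because problems surface all at once

- Incremental: faults are easier to localize and an early working skeleton (prototype) of the system becomes available, but stubs or drivers must be written and some modules are tested late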

4. Stubs and Drivers in Integration Testing

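- Stubs and drivers act as substitutes for missing modules

- Stubs replace modules that are called by the module under test, returning simulated data to the calling module

- Drivers replace modules that call the module under test, feeding it test-case input and collecting its output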
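
A minimal sketch of both substitutes, assuming a hypothetical `order_total` module whose lower-level tax service and higher-level caller are not yet available: the stub returns canned answers in place of the tax module, and the driver feeds test-case input to the module under test and compares its output with the expected values. All names and the 10% rate are illustrative.

```python
def order_total(items, tax_service):
    """Hypothetical module under test: sum (price, quantity) pairs and add tax."""
    subtotal = sum(price * qty for price, qty in items)
    return round(subtotal + tax_service.tax_for(subtotal), 2)


class TaxServiceStub:
    """Stub for the missing lower-level tax module: returns a canned fixed-rate tax."""

    def __init__(self, rate=0.10):
        self.rate = rate
        self.calls = []  # arguments received, so a test can check the interface

    def tax_for(self, amount):
        self.calls.append(amount)
        return amount * self.rate


def driver():
    """Driver standing in for the missing caller: feed inputs, compare outputs."""
    cases = [
        ([(10.0, 2)], 22.0),           # subtotal 20.00 plus 10% tax
        ([(5.0, 1), (2.5, 4)], 16.5),  # subtotal 15.00 plus 10% tax
        ([], 0.0),                     # empty order
    ]
    results = []
    for items, expected in cases:
        actual = order_total(items, TaxServiceStub())
        results.append((items, expected, actual, actual == expected))
    return results
```

Once the real tax module exists, it replaces the stub without changing `order_total`, which is the point of integrating against an interface.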

Topic: Validation Testing

1. Purpose and Significance of Validation Testing

- Evaluates software against business requirements

- Demonstrates the product's intended use and suitability

2. Validation Testing Process

- Assessing software during development or at the end

- Verifying whether the software meets user needs and expectations

3. User Acceptance Testing (UAT)

- Conducted by end-users or client representatives

- Tests usability, functionality, and compliance

4. Beta Testing

- Releasing software to a limited set of external users

- Collecting feedback and identifying any remaining issues

Regression Testing:

1. Purpose and Definition:

- Regression Testing ensures that recent code changes or modifications do not adversely affect existing functionalities.

- It is performed to validate that the old code continues to function correctly after introducing new changes or features.

2. Steps in Regression Testing:

a. Identify Code Changes:

- Determine the specific code modifications, enhancements, or bug fixes that have been made.

- Understand the scope of changes to assess potential impact on existing functionalities.

b. Select Test Cases:

- Choose a subset of relevant test cases from the existing test suite.

- Focus on test cases that cover the modified code and potentially affected areas.

c. Execute Test Cases:

- Run the selected test cases to verify the functionality and behavior of the modified code.

- Check if the expected outputs match the actual outputs.

d. Compare Results:

- Compare the actual test results with the expected results to identify any discrepancies.

- Analyze differences to determine if they indicate potential defects or regression issues.

e. Defect Resolution:

- If defects are identified, report them and work on fixing the issues.

- Debug the code, make necessary changes, and retest the fixed code to ensure proper resolution.
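
Steps b and d can be sketched as follows, assuming the team keeps a map from each regression test to the modules it exercises (for example, collected with a coverage tool). The test names, module names, and the `COVERAGE` map are hypothetical; executing the selected tests (step c) is left to the test runner.

```python
# Hypothetical coverage map: the modules each regression test exercises.
COVERAGE = {
    "test_login": {"auth", "session"},
    "test_checkout": {"cart", "payment", "session"},
    "test_refund": {"payment"},
    "test_profile": {"profile", "auth"},
    "test_search": {"catalog"},
}


def select_regression_tests(coverage, changed_modules):
    """Step b: pick the tests that exercise at least one changed module."""
    changed = set(changed_modules)
    return sorted(name for name, modules in coverage.items() if modules & changed)


def compare_results(expected, actual):
    """Step d: map each test whose actual outcome differs to (expected, actual)."""
    return {
        name: (outcome, actual.get(name))
        for name, outcome in expected.items()
        if actual.get(name) != outcome
    }
```

For a change to the `payment` module, `select_regression_tests(COVERAGE, ["payment"])` selects `test_checkout` and `test_refund`; tests that never touch `payment` are skipped.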

3. Test Case Management:

a. Test Suite Maintenance:

- Update the regression test suite with new test cases for the modified code.

- Remove or update obsolete test cases that are no longer applicable.

b. Test Prioritization:

- Prioritize test cases based on their importance, impact on critical functionalities, and areas prone to regression.

- Focus on high-priority test cases to ensure critical areas are thoroughly tested.
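
One way to apply these criteria is to score each test case and run the highest scores first. The sketch below uses a hypothetical scoring rule (twice the importance, plus 5 for a critical-path test, plus its recent failure count); the weights and the sample `SUITE` are assumptions for illustration, not a standard formula.

```python
from dataclasses import dataclass


@dataclass
class RegressionCase:
    name: str
    importance: int       # 1 (low) to 5 (high) business importance
    critical_path: bool   # covers a critical functionality
    recent_failures: int  # failures in recent runs: a proxy for regression-prone areas


def priority(case: RegressionCase) -> int:
    """Hypothetical scoring rule; the weights are illustrative, not a standard."""
    return 2 * case.importance + (5 if case.critical_path else 0) + case.recent_failures


def prioritize(cases):
    """Highest priority first; ties are broken by name so the order is repeatable."""
    return sorted(cases, key=lambda c: (-priority(c), c.name))


SUITE = [
    RegressionCase("footer_links", 1, False, 0),
    RegressionCase("search", 3, False, 1),
    RegressionCase("checkout", 5, True, 1),
    RegressionCase("profile", 2, False, 3),
    RegressionCase("login", 4, True, 0),
]
```

With these weights the run order is `checkout`, `login`, `profile`, `search`, `footer_links`; `profile` and `search` tie and are ordered by name.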

4. Automation:

- Consider automating regression tests to improve efficiency and repeatability.

- Utilize test automation tools and frameworks to automate test case execution and result comparison.
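
As a sketch of automated execution and result comparison, Python's standard `unittest` module can load and run a suite programmatically and report the outcome, which is the kind of step a CI pipeline repeats after every change. The `SmokeTests` class is a placeholder for a real regression suite.

```python
import io
import unittest


class SmokeTests(unittest.TestCase):
    """Placeholder regression tests; a real suite would exercise application code."""

    def test_addition(self):
        self.assertEqual(1 + 1, 2)

    def test_upper(self):
        self.assertEqual("abc".upper(), "ABC")


def run_regression_suite(*test_classes):
    """Load and run the given TestCase classes, returning a machine-readable summary."""
    loader = unittest.defaultTestLoader
    suite = unittest.TestSuite(loader.loadTestsFromTestCase(cls) for cls in test_classes)
    stream = io.StringIO()  # capture the runner's text report instead of printing it
    result = unittest.TextTestRunner(stream=stream, verbosity=2).run(suite)
    return {
        "run": result.testsRun,
        "failures": len(result.failures),
        "errors": len(result.errors),
        "passed": result.wasSuccessful(),
        "report": stream.getvalue(),
    }
```

In a CI job, a `passed` value of `False` would typically fail the build. From the command line, `python -m unittest discover` finds and runs a whole test directory in the same way.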

5. Incorporation into SDLC:

- Integrate regression testing into the software development lifecycle.

- Perform regular regression tests after each code change, feature addition, or system update.

- Ensure regression testing is part of the overall quality assurance process.

System Testing:

1. Purpose and Definition:

- System Testing aims to validate the fully integrated software product, ensuring that it meets end-to-end system specifications.

- It focuses on testing the interaction of software components with external peripherals and verifying the desired outputs.

2. Types of System Testing:

a. Alpha Testing:

- Internal teams perform alpha testing at the developer's site before releasing the software to external customers.

- It helps identify issues and gather feedback from internal users.

b. Beta Testing:

- End users conduct beta testing at their own sites to validate usability, functionality, compatibility, and reliability.

- Real users provide inputs into design, functionality, and usability, contributing to future product improvements.

c. Acceptance Testing:

- Acceptance testing ensures that the software system complies with business requirements and meets the necessary criteria for end-user delivery.

- It focuses on verifying the system's compliance with specified requirements.

d. Performance Testing:

- Performance testing evaluates system parameters, such as responsiveness, stability, scalability, reliability, and resource usage.

- It measures the quality attributes of the system under various workloads.
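
Dedicated tools such as JMeter or LoadRunner are the usual choice, but the core idea of measuring responsiveness under different workloads can be sketched in a few lines. In this minimal sketch, `handle_request` is a hypothetical stand-in that sleeps to simulate an I/O-bound request, and `load_test` issues a number of requests at a given concurrency and summarizes latency and throughput. The p95 value uses a simple nearest-rank approximation, and `requests` must be at least 1.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(delay_s: float) -> None:
    """Hypothetical operation under test: sleeps to simulate an I/O-bound request."""
    time.sleep(delay_s)


def timed_call(delay_s: float) -> float:
    """Return the wall-clock duration of one request in seconds."""
    start = time.perf_counter()
    handle_request(delay_s)
    return time.perf_counter() - start


def load_test(concurrency: int, requests: int, delay_s: float = 0.005) -> dict:
    """Send `requests` calls using `concurrency` worker threads; summarize latency in ms."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, [delay_s] * requests))
    elapsed = time.perf_counter() - start
    ms = [t * 1000 for t in latencies]
    return {
        "concurrency": concurrency,
        "requests": requests,
        "throughput_rps": requests / elapsed,
        "mean_ms": statistics.mean(ms),
        "p95_ms": ms[int(0.95 * (len(ms) - 1))],  # simple nearest-rank approximation
        "max_ms": ms[-1],
    }
```

Running `load_test` at several concurrency levels (for example 1, 4, and 16 workers) and comparing the summaries shows how responsiveness changes as the workload grows.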

3. Testing Scope:

- Test the fully integrated application, including interactions between components and the system as a whole.

- Verify the correctness of inputs and corresponding desired outputs.

- Evaluate the user's experience with the application.

4. Test Execution:

- Develop comprehensive test cases that cover various scenarios and user interactions.

- Execute the test cases to validate the behavior and functionality of the entire system.

- Record and analyze the test results to identify any deviations from expected outcomes.

5. Test Environment:

- Set up a suitable test environment that closely resembles the production environment.

- Ensure the availability of necessary hardware, software, and network configurations for accurate testing.

Black Box Testing:

1. Definition and Overview:

- Black Box Testing is a software testing method that focuses on the external behavior of the software without considering its internal code structure.

- Testers have no knowledge of the internal implementation and base their testing on software requirements and specifications.

- It is also known as Behavioral Testing or Input-Output Testing.

2. Types of Black Box Testing:

a. Functional Testing:

- This type of black box testing verifies the functional requirements of the system.

- Testers validate whether the software functions as intended and meets the specified business logic.

b. Non-functional Testing:

- Non-functional testing focuses on validating the non-functional requirements of the software, such as performance, scalability, usability, and security.

- It ensures the software meets the desired quality attributes.

c. Regression Testing:

- Regression testing is performed after code fixes, upgrades, or system maintenance to ensure that new changes have not introduced defects in existing functionalities.

- Test cases are selected to cover affected areas and verify the unchanged parts of the software.

3. Testing Approach:

- Examine the software requirements and specifications.

- Design test cases based on valid inputs (positive test scenarios) and invalid inputs (negative test scenarios).

- Determine the expected outputs for each test case.

- Execute the test cases by providing the selected inputs to the software.

- Compare the actual outputs with the expected outputs.

- Report and track any deviations or defects found during testing.
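
The approach can be sketched as table-driven tests. The specification here is hypothetical (a username must be 3 to 12 ASCII letters or digits). The test table is written from that specification alone, with positive and negative scenarios and their expected outputs, and the runner only compares outputs without inspecting the implementation.

```python
import re


def is_valid_username(name: str) -> bool:
    """Hypothetical system under test. Spec: 3 to 12 characters, ASCII letters and digits only."""
    return re.fullmatch(r"[A-Za-z0-9]{3,12}", name) is not None


# Test cases derived only from the specification, not from the code above.
# Each entry: (description, input, expected output).
CASES = [
    ("positive: minimum length", "abc", True),
    ("positive: maximum length", "a" * 12, True),
    ("positive: mixed letters and digits", "user42", True),
    ("negative: too short", "ab", False),
    ("negative: too long", "a" * 13, False),
    ("negative: illegal character", "user_42", False),
    ("negative: empty input", "", False),
]


def run_black_box(cases, system):
    """Feed each input to the system and report cases whose actual output differs."""
    deviations = []
    for description, given, expected in cases:
        actual = system(given)
        if actual != expected:
            deviations.append((description, given, expected, actual))
    return deviations
```

An empty deviation list means every scenario matched the specification; any entries returned are the defects to report and track.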

4. Tools Used for Black Box Testing:

- Functional/Regression Testing Tools: QTP (QuickTest Professional), Selenium.

- Non-functional Testing Tools: LoadRunner, JMeter.

5. Advantages and Disadvantages:

- Advantages of Black Box Testing:

- Suitable for testing large code segments.

- Code access is not required, enabling testers with minimal programming knowledge to perform testing.

- Tester's perspective is separated from the developer's perspective, reducing bias in the test results.

- Disadvantages of Black Box Testing:

- Limited coverage, as only selected test scenarios are performed.

- Testers have limited knowledge about the application, potentially missing specific code segments or error-prone areas.

- Test case design can be challenging.

White Box Testing:

1. Definition and Overview:

- White Box Testing is a software testing technique that focuses on the internal structure, design, and code of the software.

- Testers have access to the internal workings of the software and examine the input-output flow, conditions, loops, and individual statements, objects, and functions.

2. Objectives of White Box Testing:

a. Verify Internal Security:

- White box testing helps identify potential security vulnerabilities and weaknesses in the software's code.

- Testers can uncover security holes and ensure the software is resistant to unauthorized access and attacks.

b. Validate Code Structure and Flow:

- White box testing aims to ensure that the code follows proper coding standards and best practices.

- Testers verify the logical flow of the code and identify any broken or poorly structured paths.

c. Test Individual Statements and Functions:

- White box testing allows testers to test each statement, object, and function individually to ensure their correctness and desired behavior.

3. Testing Levels for White Box Testing:

- White box testing can be performed at different levels of software development, including:

a. System level: Testing the complete software system, including integrated components.

b. Integration level: Testing the interactions and interfaces between software modules.

c. Unit level: Testing individual units or components of the software.

4. White Box Testing Process:

a. Understand the code:

- Testers analyze and understand the software's internal code, structure, and design.

b. Design test cases:

- Testers create test cases to exercise different code paths and functionalities within the software.

- Test cases target specific inputs, conditions, and expected outputs.

c. Execute test cases:

- Test cases are executed by providing the selected inputs and observing the corresponding outputs.

- Testers validate whether the actual outputs match the expected outputs.

d. Debugging and issue resolution:

- If discrepancies or defects are found, testers debug the code to identify and fix the underlying issues.

- Test cases may be modified or added to address the identified problems.
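
To illustrate designing test cases from the code itself, the sketch below uses a hypothetical `shipping_cost` function with three decisions and a tiny line tracer built on Python's `sys.settrace`. The inputs in `BRANCH_INPUTS` were chosen by reading the code so that every decision evaluates both true and false at least once (branch coverage). Real projects would use a dedicated coverage tool rather than a hand-written tracer.

```python
import sys


def shipping_cost(weight_kg: float, express: bool) -> float:
    """Hypothetical unit with three decisions."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if weight_kg <= 2:
        cost = 5.0
    else:
        cost = 5.0 + (weight_kg - 2) * 1.5
    if express:
        cost *= 2
    return cost


# Chosen by reading the code: each decision is taken both ways at least once.
BRANCH_INPUTS = [
    (0, False),  # first decision true: error-handling path
    (1, False),  # light parcel, standard delivery
    (3, True),   # heavy parcel, express delivery
]


def lines_executed(func, *args):
    """Run func(*args) and return the line numbers inside func that executed."""
    code = func.__code__
    hit = set()

    def tracer(frame, event, arg):
        if event == "line" and frame.f_code is code:
            hit.add(frame.f_lineno)
        return tracer

    sys.settrace(tracer)
    try:
        func(*args)
    except ValueError:
        pass  # the error-handling branch is a path we want to cover, not a failure
    finally:
        sys.settrace(None)
    return hit


def coverage_of(func, inputs):
    """Union of lines executed across all test inputs."""
    covered = set()
    for args in inputs:
        covered |= lines_executed(func, *args)
    return covered
```

Each input reaches lines the others miss: `(0, False)` is the only one to hit the `raise`, `(1, False)` the flat-rate line, and `(3, True)` the surcharge and express lines.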

5. White Box Testing Tools:

- EclEmma, NUnit, PyUnit, HtmlUnit, and CppUnit are some commonly used white box testing tools.

- These tools assist in code coverage analysis, unit testing, and providing insights into the internal workings of the software.

Debugging:

1. Problem Identification and Report Preparation:

- Identifying and reproducing the issue: The first step in debugging is to understand and reproduce the problem reported by users or identified through testing.

- Gathering relevant information: Collecting data, logs, and any other useful information that can help in understanding the cause of the bug.

2. Assigning the Report and Verification:

- Assigning the bug report: The bug report is assigned to a software engineer responsible for verifying the reported bug and ensuring its validity.

- Verifying the bug: The assigned engineer performs tests and investigates the reported behavior to confirm the presence of the bug.

3. Defect Analysis:

- Modeling and documentation: Analyzing the software's design and documentation to understand the expected behavior and identify potential areas of error.

- Finding and testing candidate flaws: Inspecting the code and executing relevant test cases to narrow down potential causes and locate the exact flaw.

4. Defect Resolution:

- Making required changes: Implementing the necessary modifications in the software code to fix the identified bug.

- Iterative approach: Debugging often involves an iterative process of making changes, testing, and refining the code until the issue is resolved.

5. Validation of Corrections:

- Testing the fix: Conducting thorough testing to ensure that the bug has been successfully resolved without introducing new issues.

- Regression testing: Verifying that the bug fix did not impact previously working functionalities by retesting the affected and related areas.
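
The cycle above can be sketched on a small, hypothetical defect: a page-count function that drops the final partial page. The sketch keeps the buggy version beside the fix so the reproduction check can be seen to detect the bug in the old version and not in the fixed one, and it reruns inputs that already worked to guard against regressions. All names and the bug itself are illustrative.

```python
def page_count_buggy(total_items: int, page_size: int) -> int:
    # Reported defect (hypothetical): 101 items at 10 per page shows 10 pages, not 11.
    return total_items // page_size


def page_count(total_items: int, page_size: int) -> int:
    """Fixed version: ceiling division so a partial final page is counted."""
    return (total_items + page_size - 1) // page_size


def reproduces_bug(impl) -> bool:
    """Steps 1-2: a minimal, repeatable reproduction of the reported behavior."""
    return impl(101, 10) != 11


# Step 5: inputs that already worked before the fix must still give the same answers.
PREVIOUSLY_WORKING = [((0, 10), 0), ((10, 10), 1), ((100, 10), 10)]


def regression_ok(impl) -> bool:
    return all(impl(*args) == expected for args, expected in PREVIOUSLY_WORKING)
```

Keeping the reproduction as a permanent test means the same defect is caught automatically if it is ever reintroduced.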

Debugging Tools:

1. Code Debuggers:

- Radare2: An open-source reverse engineering framework and debugger that supports various platforms and architectures.

- WinDbg: A debugger provided by Microsoft for Windows applications and system-level debugging.

2. Memory Debuggers:

- Valgrind: A widely used memory debugging tool that helps detect memory leaks, invalid memory access, and other memory-related issues.

3. Profilers:

- Performance profilers: Tools like gprof and perf that help analyze the performance of the software by identifying bottlenecks and optimization opportunities.

- Code coverage profilers: Tools such as gcov and JaCoCo that measure the extent to which the source code is executed during testing.
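
gprof and perf target native code. As a Python-side analogue, the standard library's `cProfile` and `pstats` modules can show where time is spent. In this sketch the `pipeline`, `parse`, and `expensive_dedupe` functions are hypothetical, with `expensive_dedupe` made deliberately slow so that it stands out in the report.

```python
import cProfile
import io
import pstats


def parse(records):
    return [int(r) for r in records]


def expensive_dedupe(values):
    # Deliberately slow: a linear list search for every element (quadratic in the worst case).
    unique = []
    for v in values:
        if v not in unique:
            unique.append(v)
    return unique


def pipeline(n):
    """Hypothetical workload: parse n records, then remove duplicates."""
    records = [str(i % 500) for i in range(n)]
    return expensive_dedupe(parse(records))


def profile_call(func, *args, top=5):
    """Run func(*args) under cProfile; return its result and a report of the top entries."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(top)
    return result, out.getvalue()
```

Sorting by cumulative time puts `expensive_dedupe` near the top of the report, pointing at the bottleneck; replacing the list with a set would be the obvious optimization.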

Difference between Debugging and Testing:

1. Objective:

- Testing: Focuses on evaluating the functionality, performance, and quality of the software by executing test cases.

- Debugging: Aims to identify and fix specific issues or bugs that have been encountered during testing or reported by users.

2. Timing:

- Testing: Performed throughout the software development lifecycle, from unit testing to system testing and beyond.

- Debugging: Begins after a bug has been identified and occurs during the development or maintenance phase.

3. Automation:

- Testing: Can be automated using various tools and frameworks to execute test cases and compare actual results with expected results.

- Debugging: Often requires manual intervention and analysis, although some debugging tools can assist in the process.

4. Knowledge and Skills:

- Testing: Requires knowledge of testing techniques, test case design, and understanding of test automation frameworks.

- Debugging: Demands in-depth knowledge of programming languages, software architecture, and debugging techniques to identify and fix bugs effectively.

5. Scope and Approach:

- Testing: Covers a broad range of activities, including functional testing, performance testing, security testing, and more.

- Debugging: Focuses specifically on troubleshooting and resolving identified defects or issues in the software.
